In 5 days, we prototyped a service that would allow Medical Assistants (MAs) to record their training and experience digitally, and tested it with 8 users.

About NELSON

The Royal Navy’s NELSON is a team of user researchers, designers, engineers and scientists from the military, the Civil Service and industry.

We help the navy to create intelligent digital services that meet user needs and support its digital transformation.

We use short workshops and discoveries to understand user needs and the constraints that might affect new or improved services in the navy.

The Royal Navy Medical Service (RNMS)

The RNMS provides agile, flexible and responsive support to operations, and employs around 1,500 people, including Doctors, Nurses, Dental Officers, Dental Nurses, Medical Services Officers, Allied Health Professionals and Medical Assistants.

A Medical Assistant (MA) in the Royal Navy provides healthcare, first aid and training, ashore and at sea, to all ships and submarines, and to the Royal Marines Commandos and other units.

There is no role within the NHS directly comparable to the MA because of the diverse skill set they deliver. MAs provide primary and secondary care, occupational health, pre-hospital trauma life support, medical administration, disease prevention and basic environmental healthcare, often in remote, isolated locations.

RNMS & NELSON: Migrant data

NELSON has been working with the RNMS for about 18 months. The RNMS saw how better data and digital services could enhance the services it provides.

In 2018, working with Solent University’s School of Media, Arts and Technology, we analysed some of the data collected during a migrant rescue mission in the Mediterranean. We wanted to see how this data could help the RNMS further improve its services for future humanitarian operations.

This work highlighted that without good-quality data, deriving value from automation and machine learning is almost impossible.

RNMS First Look

In June 2019, we ran an RNMS ‘First Look’ to understand a broad set of non-clinical needs, frustrations and opportunities, some of which had been highlighted by the failure to exploit the migrant crisis data.

We identified over 20 opportunities and prioritised them based on expected benefits, the complexity of each problem and how easy they might be to fix. Our prioritisation was far from perfect, but faced with lots of opportunities, we decided that it’s sometimes better to just start and learn as we go.

The prioritisation identified 2 unmet needs:

- MAs record their training and experience on paper - this is time-consuming and records aren’t credible outside the military because of the way they are recorded.

- RNMS cannot systematically ensure that their MAs have the skills and experience they need to support operations like the humanitarian crisis.

What next?

With financial backing from the Royal Navy’s Chief Technology Officer (CTO), we decided to use a design sprint to explore these needs and to gain experience of this way of working. We considered running a discovery, which would have given us a more thorough understanding of needs and constraints. However, we decided that testing ideas with users would be more valuable as a first step towards digital transformation.

What is a Design Sprint?

A design sprint is a way to answer questions by designing, prototyping and testing ideas with users. Design sprints last 4 to 5 days and use a structured workshop format, created and honed by Google Ventures and others.

Participants include a facilitator, designers and a range of people who care about the subject area. If you’re interested in learning more about design sprints, the resources below will help. Start at the top of the list for an overview and work down for more detail:

- The Design Sprint and Miro show two images that outline the format.

- Watch a 90-second video from one of the design sprint creators.

- Google Ventures provides an overview and links to more resources.

- Read the Google Ventures design sprint book on Amazon.

- Read an overview of the 4-day design sprint from AJ&Smart, who have iterated on the original Google Ventures format (design sprint 2.0).

- Sprint Stories, DWP Digital and MOJ Digital & Technology have written good blog posts about design sprints in the UK government.

- UX Planet hosts a discussion about design sprints and the GDS agile delivery phases.

Monday

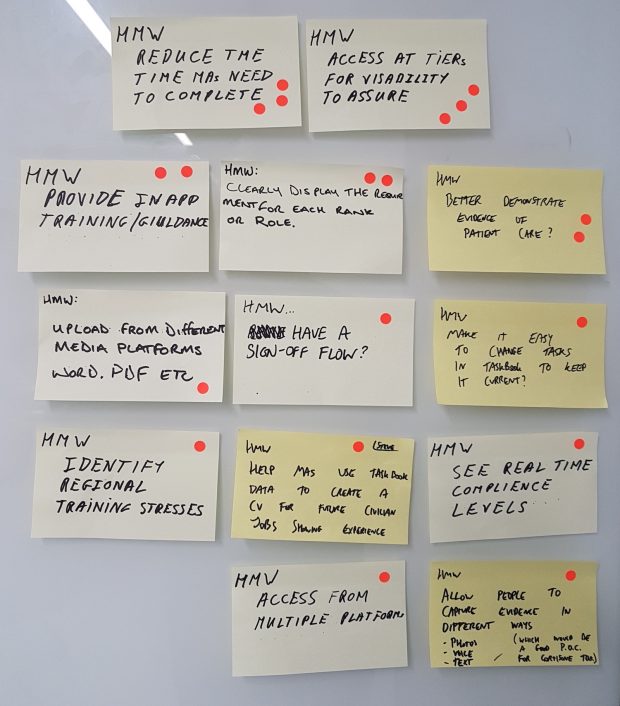

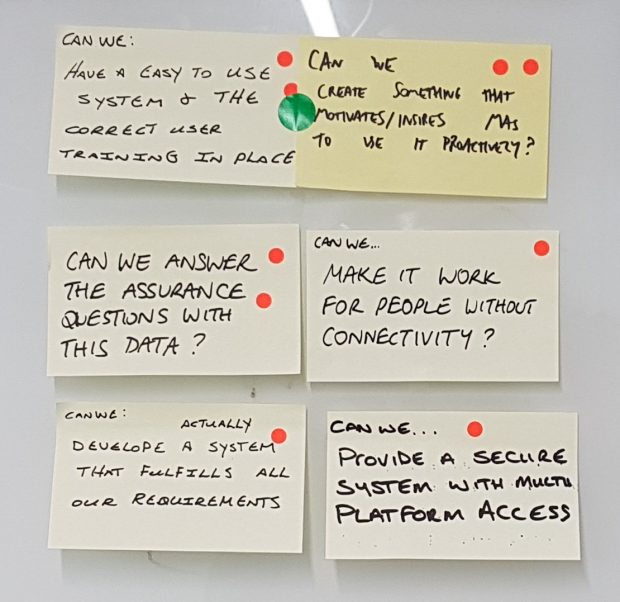

We defined the challenge and spoke with a number of experts, asking lots of questions. We captured ideas, problems and uncertainties on Post-its, written in the format ‘How might we…?’

We set a 2-year goal and used it to define and prioritise our sprint questions. The rest of the sprint focused on answering these questions through prototyping and user testing. The 2-year goal and our top 6 sprint goals are shown below:

The training and experience of all MAs is held and assured digitally. MAs love using the service, which is part of a wider medical system drawn from a single data source.

Tuesday

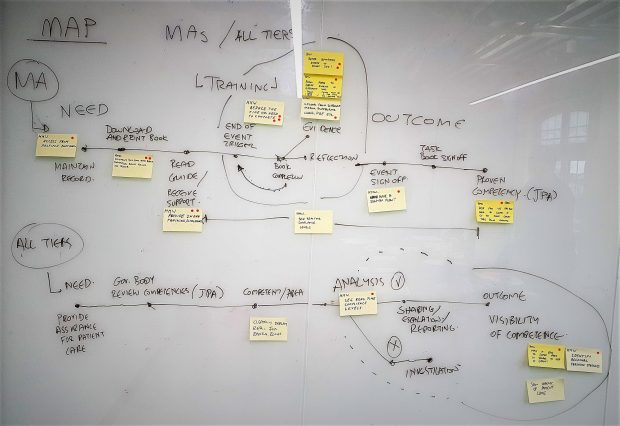

We generated simple ‘as-is’ journey maps to show what MAs need in order to record activities in a task-book (top flow). See how the RNMS assures the skills and experiences of its MAs (bottom flow).

We looked at other apps and services for design inspiration and began generating ideas. We took notes, doodled, used a technique called ‘crazy eights’ and drew storyboards to explore what is needed to record and report MA competencies.

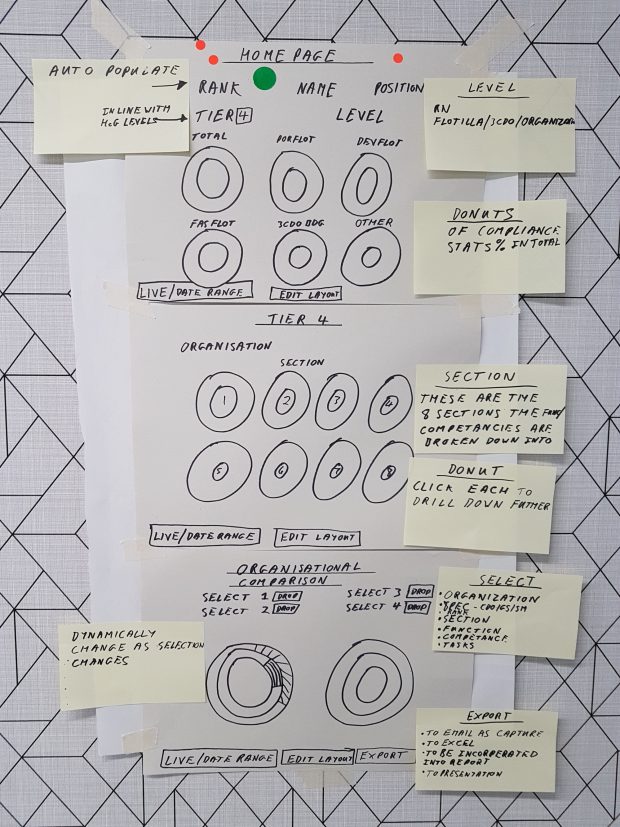

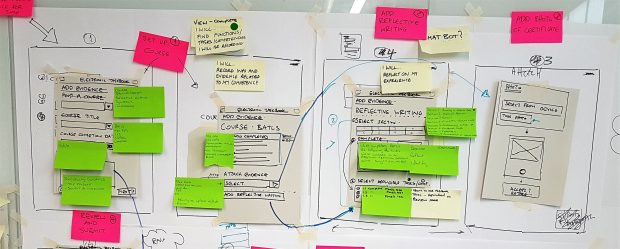

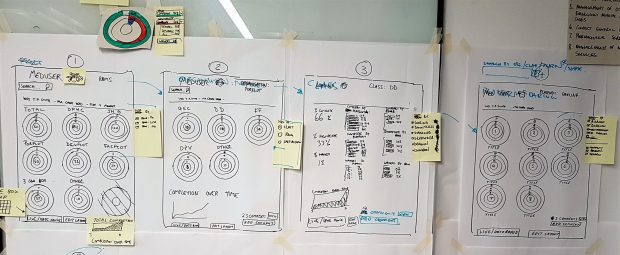

It was hard to vote for a single MA competency recording storyboard, so we put several ideas into the journey map below. The MA competency reporting voting was more conclusive, so a photo of the winning 3-part storyboard is below:

Wednesday

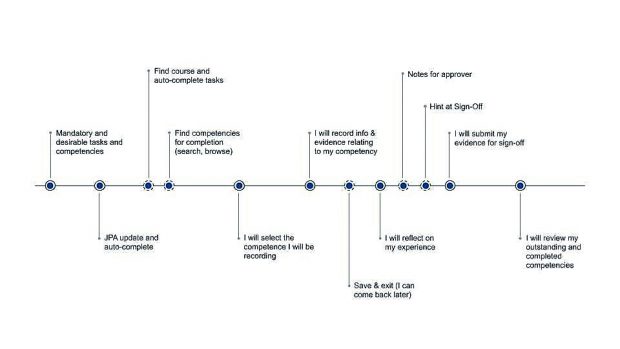

We created our final set of storyboards. We refined the flow, added copy and graphics, and planned the user testing. Our refined storyboards for MA competency completion and competency assurance/reporting can be seen below:

Thursday

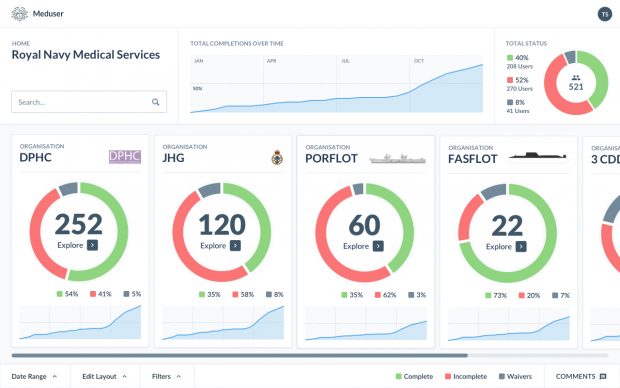

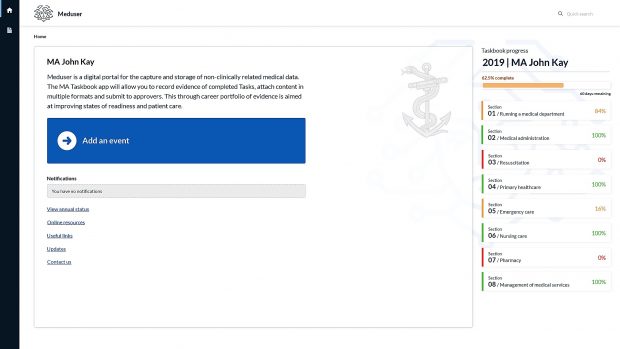

On Thursday (and some of Wednesday), NELSON’s in-house designers transformed the storyboards into two awe-inspiring, high-fidelity prototypes, while the rest of the team either prepared for the user-testing or returned to other work. Here are some of the screenshots from the prototypes, ready for testing with users:

Friday

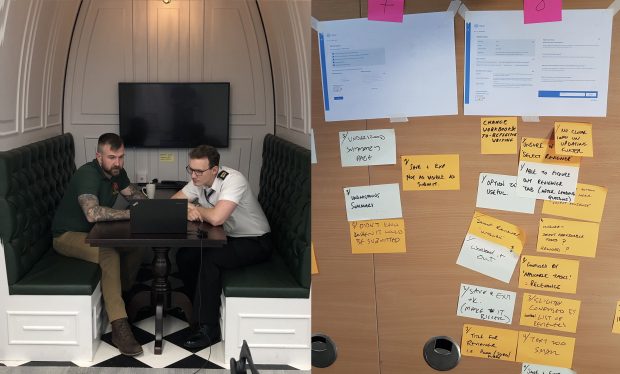

We tested the two prototypes: the MA Task Book with 6 users and the Competence Reporting Dashboard with 2 users.

The sessions were led by NELSON staff and by Royal Navy and Royal Marines participants, so that everyone could gain experience of user testing. The rest of the team observed and listened in remotely to understand each user’s experience.

User Feedback

The Good

The feedback we received was detailed and positive. Users loved the service and highlighted how a live service would transform the way they track, analyse and assure MA competence.

What didn’t work?

Users found a couple of things confusing: how we represented task completion, and the difference between generic and core tasks.

Users also suggested approximately 30 minor improvements, including increasing the font size, explaining the meaning of colours and including other types of reflective accounts. All positive and negative feedback has been captured for future work.

Outcome

On Monday, we established 2 sprint questions. Here they are again, with the answers:

Q1: Can we create a digital MA Task Book that MAs can use without any training, assistance or on-screen guidance?

Yes. We observed users working through the two prototypes with little guidance, and their feedback highlighted how clear, simple, intuitive and user-friendly they found them.

Q2: If the Royal Navy made completion of the MA Task Book voluntary, would users still want to complete it?

User 1: Yes, I would use it as a digital folder to keep track of what I have done.

User 2: Yes, I would use it to stay on top of training and progress.

User 3: Yes, to keep all certificates from the courses.

User 4: Yes, I can take this around with me and it won’t get damaged or lost.

User 5: Yes, it would be good practice to keep track of personal performance.

User 6: Yes, it is a good way to keep a portfolio of work and achievements, you can easily keep every course and little thing that you have done.

Things we liked about the design sprint

- It’s immersive - living and breathing a problem for a week enables real understanding.

- The team - co-location and collaboration for a week allow a team to develop.

- Focus - my time is often split between projects, so focusing on one thing was satisfying.

- User centred - the design sprint is a very different way of working for the Royal Navy. It demonstrates that testing something with users early is better than waiting until we have what we think is a finished product.

- Testing experience - we’ve done lots of user research in NELSON, but this type of interactive testing and observation has been limited. It’s great to build up experience in the navy for this sort of testing.

Limitations

Constraints. Design sprints allow us to answer specific questions by testing with users. However, in organisations like the Royal Navy, it’s the constraints that are more likely to stop or slow down delivery.

For example, we haven’t yet explored how we’ll authenticate users, whether we can integrate with other systems, where a service might be hosted, or whether there are any show-stopping data privacy constraints.

Biases. The design sprint format tries to reduce biases in decision making through standardised writing styles, lone working, and decision-making roles, but biases still creep in.

‘Lost’ ideas. The design sprint quickly gets to a set of storyboards to test with users. In doing so, several perfectly viable ideas are, justifiably, not pursued. As part of the design sprint wash-up, it might be worth capturing some of these ideas for future delivery teams to investigate and test with users.

Next Steps

While design sprints and the Government Digital Service (GDS) approach both emphasise user-centred design, the design sprint doesn’t fit neatly into a single Service Manual phase, which makes it hard to identify a clear next step.

The MA Task Book design sprint allowed us to learn some user needs (as we would in discovery) and to test ideas with users (as we would in alpha). But we did not systematically research user needs or investigate potential constraints on the service.

We did, however, demonstrate a possible template for the future capture of training compliance, assurance of professional delivery and identification of training shortfalls.

We’ve identified 3 next steps which we believe are necessary to get the service into alpha. We will:

- Identify additional user needs and then validate and prioritise all the needs we’ve discovered.

- Start to define some key performance indicators for the service.

- Research the constraints that we need to consider in designing the MA Task Book and other non-clinical digital services.

To find out more, visit the About NELSON page or the NELSON Standards design system, contact the NELSON team at navyiw-nelson@mod.gov.uk, or follow us on Twitter for our latest news.
