Knowing where to start is a challenge

The Royal Navy’s NELSON team is made up of user researchers, designers, engineers and scientists from the military, Civil Service and industry. We help teams across the Royal Navy create intelligent digital services that meet user needs, and we support the Royal Navy’s digital transformation with strategy and delivery advice.

We’re building the NELSON Data Platform to store, process and publish data, alongside a design system that will make the user experience consistent across applications. The programme uses the Government Digital Service’s (GDS’s) Service Manual to develop digital services in phases that are iterative and user-centred, in line with Agile principles.

In the early phases (called discovery and alpha), a team will identify user needs, understand risks, and develop and iterate prototype designs that can be used to get feedback from users early and often. Discovery typically takes between 6 and 8 weeks, but this ultimately depends on the complexity and scope of the service that is required.

The Royal Navy recognises that accessible data, digital services and intelligent applications are key enablers for future capabilities. However, the Royal Navy runs lots of applications that were developed when open architectures, data standards, web services and user-centred design (UCD) practices were less mature.

Furthermore, as threats and the Royal Navy’s objectives have changed, other needs have emerged. Faced with limited resources, an operational imperative and a large number of opportunities, our first digital transformation challenge is knowing where to start.

We wanted to make sure that the projects we take on are the most important ones to do right now. This also lets us spot any obvious red flags that would cause a project to fail before we invest money, time and reputation in a full discovery.

It’s worth saying that we’re not the first team in government to write about ‘pre-discovery’. The Department for Environment, Food and Rural Affairs (Defra) blogged about it in 2017, setting out the problems they faced and what they learned along the way, which inspired us to do the same.

Understanding ‘just enough’ helps us decide where to start

Our solution was a process that we call a ‘First Look’. This is a 2-day pre-discovery workshop that brings together stakeholders with digital experts in a structure that allows us to understand just enough about the subject to recommend the next steps.

A First Look helps us to learn:

- What the participants and their organisation are aiming to do.

- Who our users are, what they need and how their organisations are structured, at a high level.

- The full range of data types, data sources and IT systems that are available to us, and their interdependencies.

- The digital maturity and expectations of the users and stakeholders.

- How current user needs are met and, if they are not, why not.

- What is being assumed about user needs and how those needs will be addressed.

- How the Royal Navy might benefit from addressing the problem or unmet needs.

Helping participants plan their next steps

First Looks also help participants understand what the next steps might be and what is needed to start:

- Given the size and the complexity of the subject areas, how can the work be broken down?

- What work should follow the First Look and will that work be linear?

- Which key dependencies need to be addressed? For example, identifying a senior sponsor or a product owner, sourcing funding or initiating cultural changes.

- How can we deliver any follow-on work? For example, can we use Royal Navy resources, or do we need contractors to support digital delivery?

The First Look format and how we facilitate the sessions

The First Look workshop format borrows ideas from the Google Ventures Design Sprint and from other proven workshop techniques. We plan an agenda for each First Look, but we have found it important to be flexible and adapt as we go along, spending more time where it’s really needed.

Session length

First Look sessions can take between 1 and 2 days, depending on what we already know about the subject. They are deliberately informal, interactive and fun, which is different from usual Royal Navy business; we have found that this combination helps people share what they know. We’ve also found it useful to have an overnight break, so that people have time to think things through and refresh their ideas.

Ground rules

At the start of the first session, we lay out some basic ground rules to help people collaborate: stay engaged, keep off personal devices, don’t waffle, challenge ideas rather than people, and don’t talk over each other. We try to keep the group to fewer than 10 people, as we have found that larger groups become unwieldy and counter-productive.

Tools and techniques

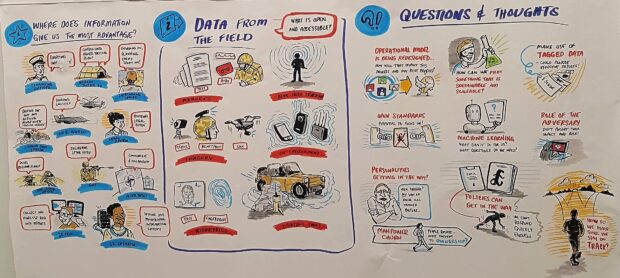

In most sessions, we use whiteboards or canvases of brown paper stuck onto walls to get people moving and talking. The only thing on a screen is the agenda. We keep all of the content on the walls as we work, which gives us quick visual and location references to previous discussions. We also refer back to earlier outputs, such as goal statements, to check the quality and scope of later exercises.

We use journey maps, hand-drawn social network graphs, data-modelling exercises, press release games and persona definitions to tease out information from people. Most participants are new to many of these techniques, so we spend time at the beginning of each session explaining what we’re going to do and why, and then use past examples to show what we’re aiming for.

Making everyone’s contribution count

We stock up on tea, coffee, water, fruit and snacks to keep people fuelled and engaged, and we ask participants not to wear uniform, in an attempt to flatten the hierarchy and value everyone’s input equally. Our facilitators also prompt participants and make sure that everyone has an equal opportunity to contribute.

To ensure wide contribution and honest consensus despite rank structures, we often gather input on Post-its on the walls, which keeps contributions anonymous and equal, and we use dot votes for decisions. We have borrowed some of these techniques from Liberating Structures. Our facilitators also use the breaks in each session to make observations and adapt the agenda.

Participants

There is usually a single person or a team that proposes the First Look and, with a little guidance from us, identifies the participants. We typically ask for a range of budget holders or responsible stakeholders, subject matter experts and end users.

Participants have included operators, analysts and people from different parts of the Royal Navy and the Royal Marines, as well as equipment manufacturers whose input is needed to help shape future direction.

NELSON’s First Look team consists of data engineers, data scientists, systems engineers, user researchers and facilitators. They operate as a team, leading sessions and joining group activities to provide advice and to make observations.

First Look outcomes and outputs

We aim to ensure that workshop participants understand more about their problem and have a clear direction on how to proceed.

Before and during the workshop we ask whether participants need any particular artefacts to support follow-on work, such as a funding business case.

After the workshop, we collate our notes, photos and observations for teams who may be involved in subsequent Agile delivery phases. The facilitation team also completes a document that helps us to judge how suitable the project is for NELSON and to provide structured recommendations and feedback to the participants.

These structured recommendations are one of the main carrots we can offer to Royal Navy stakeholders to convince them that a First Look workshop is worth 2 days of their time, even though we can’t guarantee their project will be picked up afterwards.

A less tangible output is that, each time we run the workshop, we finish with a group of stakeholders who know much more about what the user-centred design process feels like, having run through many of the exercises themselves.

This helps us when we take projects to discovery, as everyone has a baseline understanding of the work involved. It also helps our programme spread a user-centred design culture across the organisation, as participants return to their work areas having enjoyed and learned from the sessions.

Iteration

At the end of each First Look we run a short Post-it-based retrospective with the participants, using 2 simple questions: ‘what went well?’ and ‘what didn’t go so well?’.

More recently, the NELSON team has also run a longer retrospective bringing together participant observations with our own recommendations of specific things we will research or try next time.

The retrospectives allow us to continuously improve the First Look, leading to changes in the order of sessions, the expertise we have in the room, how we capture outputs, and how we practise and run large mapping exercises.

Now and next

We ran 4 First Looks over the winter of 2018 and spring 2019 and have 4 others scheduled for autumn 2019.

Here is how the First Looks turned out:

- 2 of them led to full discovery phases

- 1 went straight into an alpha phase which we ran a little like a design sprint

- 1 has been paused until further funding is available

Over the next 6 to 12 months, we will check alignment with programme goals, develop a multidisciplinary pool of people who enjoy facilitating, continue to iterate based on participant feedback and possibly collaborate with external facilitation experts to improve the format further.

We’re quite proud of what we’ve put together here as a way to build the cultural capital needed to try Agile working and to triage our backlog of projects. We know it can be improved, though, not just through feedback-based iteration but through expert discussion too. As we found when we started, there isn’t a lot of talk out there about pre-discovery in Agile.

We hope that some of our lessons can be useful for others in government who face similar issues. More importantly, we would love to hear from anyone who wants to share their experiences or would like more information. Please leave your comments below.

NELSON is changing the way the Royal Navy uses its data.

To find out more, visit the About NELSON page or the NELSON Standards design system, contact the NELSON team at navyiw-nelson@mod.gov.uk, or follow our Twitter for our latest news.
