First Looks are the Digital Discovery Assessment Branch’s (DDAB’s) way of assessing whether a project is suitable to proceed onto Discovery.

In Part 1, we covered the problems we had with efficiently assessing a submitted project’s potential for Discovery, and how we decided to test ways to quickly take a good-enough look at the problem.

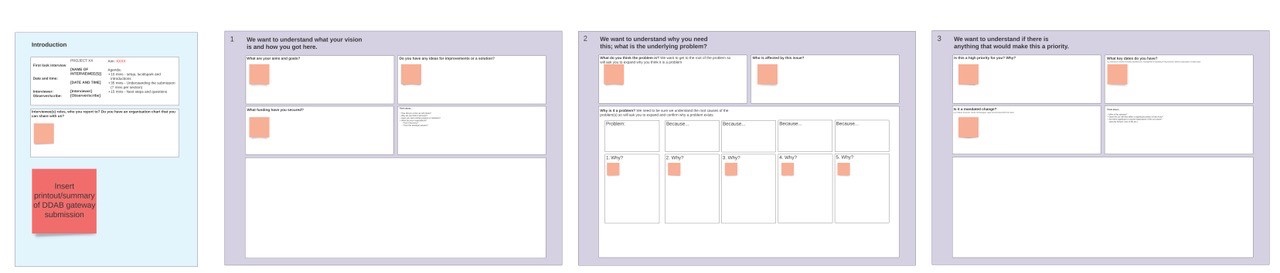

In this section we will go over the top principles and question areas in our initial conversations with project owners, which we called semi-structured interviews.

Semi-structured interview as the core of our method

The main method we used to carry out our First Look was the semi-structured interview. That is, an interview guided by general questions rather than a fixed set of questions that must be rigidly followed.

To get the best out of a session we needed to prepare the interviewees. Not only did we want to ensure we spoke to the most knowledgeable individual(s) but it was also a way to introduce our ways of working, why we needed an interview and our principles (eg we work in a solution-agnostic way).

Along with the initial chat we also ensured that we had a detailed meeting agenda. We included links to the board and asked for relevant documents to be sent ahead of the meeting.

We also let them know that we planned to record the session, so that we had a chance to prepare if they were not happy with this.

Top 7 principles for running a First Look semi-structured interview

After a couple of test runs we decided on these key principles:

- We would use a whiteboard as the simplest way to take notes.

  - due to the broad nature of our questions, answers were likely to jump around between them, so a single whiteboard was the simplest option

- We used (virtual) sticky notes for answers, one answer per note.

  - afterwards we could group these notes (sometimes known as affinity mapping) to summarise the answers

- We would work in the open.

  - not only would we screen share our note taking, but we would also share a link to the whiteboard in the meeting invite and follow up afterwards so interviewees could review it

- We would only interview the one or two people with the most knowledge.

  - rather than risk having a group chat that may be inefficient

- The whiteboard had to look professional, clear and easy to follow.

  - we also made it into a PowerPoint format for those who preferred working that way

- We used open questions.

  - that is, we avoided questions that could lead to yes/no answers, in line with common user research principles

- We would summarise our interviews as soon as possible.

  - we would also share the summary for comment by interviewees

The 6 key areas of information we needed by the end of a First Look semi-structured interview

You can see the board for yourself here - the main sections were based on what we needed to know by the end.

The first two sections were basic admin for us:

- What’s their vision for the project?

  - why did they contact DDAB, what do they want to achieve and why, do they have any funding?

- What is the problem?

  - who is affected by it, why, what is the impact of the problem?

  - what happens if the problem isn’t solved?

- Why is this a priority?

  - what key dates do we need to be aware of, who is supporting this project, are there any legal, policy or other PESTLE changes we need to be aware of, how many users are affected by it?

- What blockers could the DDAB team face?

  - do solutions have any technical constraints (eg must use a certain system), are there any barriers for the team (eg certain people unavailable, work can only be carried out at certain locations)?

  - what work has been done in the past and what problems did they face?

  - what help do they need from DDAB?

- Who are the key people?

  - who owns this and are they our main point of contact, who has the greatest understanding (not always the same as the current owner), what skills and support can their team offer (eg a developer)?

  - are there similar projects we should contact?

- Next steps and questions

  - overview of how we work and what Agile is (ie solution agnostic)

  - what happens next

  - what we need from them, what we expect of them as a delivery partner/product owner/service owner

  - any feedback on the process or questions they may have

Summarising sessions

We aimed to summarise a session as soon as possible. This meant that information was still fresh and we could check it with interviewees. It also meant that any team members who couldn’t make the call were aware of what was covered.

It also meant we could create themes for the problem and identify where we needed more information. We could draft a vision for it if we felt confident but the aim of the session was breadth of information rather than depth.

Team review

After the interview the team reviewed the outputs to determine what we should do next, which we call triage. In some cases the next step was to go into Discovery; in others we used a Lean UX canvas to gather more information and add depth to our understanding of the problem, users and stakeholders.

In other cases we discussed the interviewee’s willingness to work in a solution-agnostic manner. If the potential partner had a solution in mind, and only that solution, it could cause tension. As a team we made the decision and then met the interviewee to discuss what to do next.

With so many potential projects we had to be ruthless to get through the work.

Final output

By the end of the 4-week First Look session we produced a Problem Profile – basically a PowerPoint that summarised the work we carried out and laid out the options for next steps.

The next steps involved collaboration, with the submitter deciding from the options which to proceed with. We give options to the submitter as ultimately it is their project and not for us to decide for them what happens next. But any option we do give must have evidence and reasoning.

How we are reviewing and refining First Looks

To get to this stage took a lot of discussion, iteration and refining and that work continues. Part of this is sharing it, first with the cross Defence UX community and now with this blog.

We continue to review and update each time we use the First Look template. Further problems we are looking at include:

- time taken from First Look to next steps – should we treat it like a week-long design sprint and have sessions pencilled in already, before we review and then decide next steps? That is, is it better to cancel a session than to set one up?

- are we asking all the right questions? What can be trimmed, refined or added to?

- how to avoid Discovery creep – some First Looks did not end but slowly merged into a Discovery. This can work as long as Discovery kick-off tasks (eg research plans) are still carried out

- how can the First Look be quickly spun up to a full Discovery where appropriate?

We continue to work in the open and to open up our backlog and Kanban boards. As part of this we want to give better expectations of timings for submitters and where they are in the queue, along with reviewing the information we expect submitters to give on the gateway.
